ByteDance has introduced a new AI system known as OmniHuman-1. The system is able to take a single photo of a subject and turn their likeness into a video of them speaking, singing, and even moving naturally, with the right number of digits on each hand.

OmniHuman-1 is impressive in that the AI-generated individuals in its videos make gestures that match their speech patterns. “End-to-end human animation has undergone notable advancements in recent years,” the ByteDance researchers wrote in a paper published on arXiv. “However, existing methods still struggle to scale up as large general video generation models, limiting their potential in real applications.”

Understandably, getting OmniHuman-1 to its current state wasn’t easy. ByteDance says that its team of engineers and developers trained OmniHuman on more than 18,700 hours of human video data, using a combination of different inputs: text, audio, and body movements.

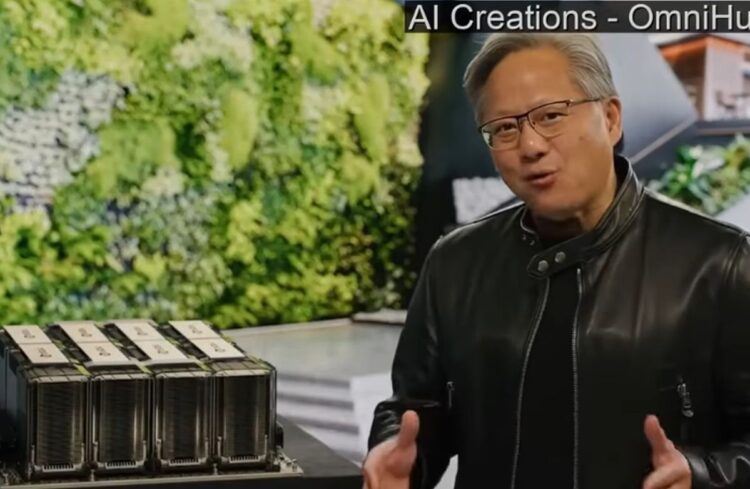

The end result is the ability to create incredibly lifelike videos of famous individuals. Examples provided by TikTok's parent company include a reconstruction of a younger Albert Einstein talking about science, NVIDIA CEO Jensen Huang rapping, and even Taylor Swift singing in Japanese.

OmniHuman-1’s ability to create hyperrealistic videos marks another milestone in the global race for AI dominance. Many industry experts say that such technology could eventually transform the way companies approach entertainment production, education, and even digital communications. That being said, it also raises concerns about its potential misuse by threat actors.
