With generative AI being the latest trend in consumer tech, it's no real surprise that companies with a stake in the game are researching ways to make it better. This is especially true for large language models (LLMs), arguably the most promoted (and probably also the most used) form of generative AI. The bitten fruit brand naturally has a horse in this race with its own Apple Intelligence, and the company has been working with NVIDIA, an old-timer in comparison, on research into making LLMs better.

Engineers from both companies have shared details of their collaboration on their respective company blogs. Their research centres on Recurrent Drafter, or ReDrafter, a speculative decoding technique that Apple published and open sourced earlier in the year. It is described as a new method for generating text with LLMs that is both faster and more efficient, combining beam search and dynamic tree attention.

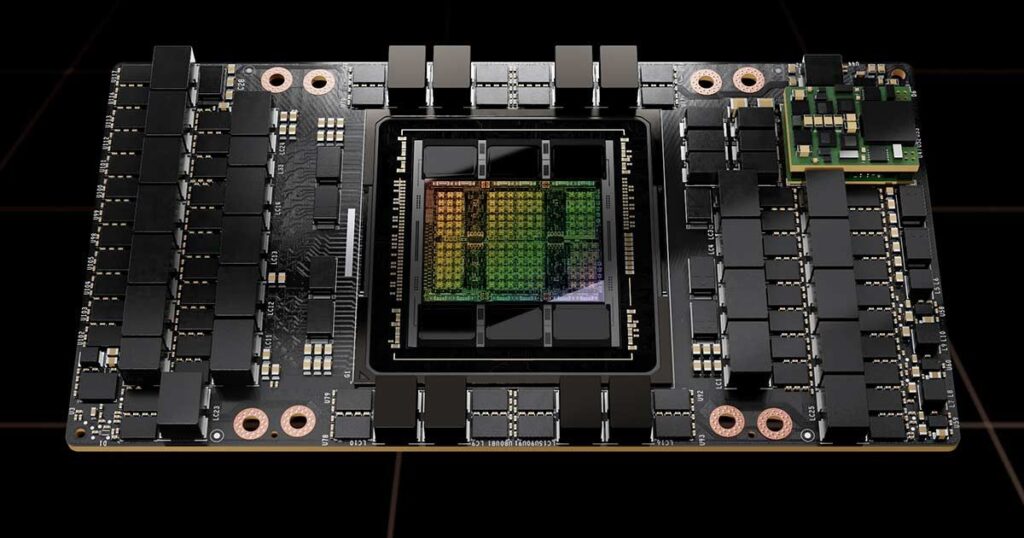

That's all pretty overwhelming, but the gist is that integrating Apple's ReDrafter into NVIDIA's TensorRT-LLM, a tool that helps LLMs run faster on Team Green's GPUs, lets tokens be generated up to 2.7x faster. This not only reduces the latency users may experience, but also makes the process more power efficient and requires fewer GPUs.

Overall, the takeaway is that techniques like these can make generative AI more efficient, and therefore cheaper to run. And since Apple's part of the work is open source, it may see wider adoption, spreading similar benefits further. For the nitty-gritty, you can read the blog posts by both companies, linked below.