Meta has announced its latest advancement in generative AI, unveiling a video generator dubbed “Movie Gen” that can create high-definition footage with sound. The new tool, which remains in the research phase, follows closely behind OpenAI’s Sora, a competing text-to-video model.

Movie Gen can produce realistic videos at 16 frames per second (fps) or 24 fps, at up to 1080p resolution, and integrates sound effects and background music. Although the footage is currently upscaled from a 768 by 768-pixel base, the tool promises to be a significant leap forward in AI video generation. On video quality, initial results from Meta's A/B testing suggest that Movie Gen outperforms both OpenAI's Sora and Runway's Gen-3 model.

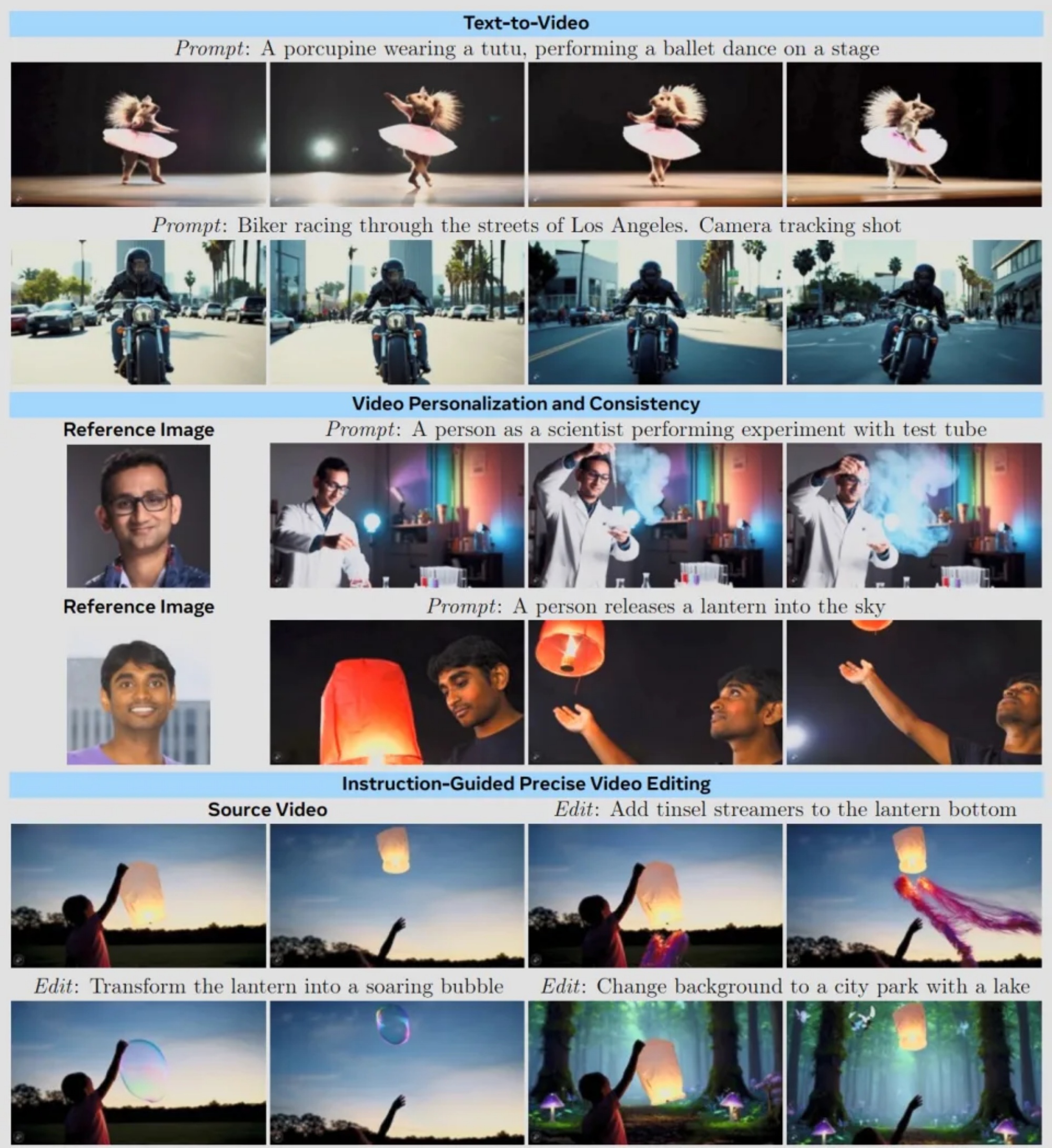

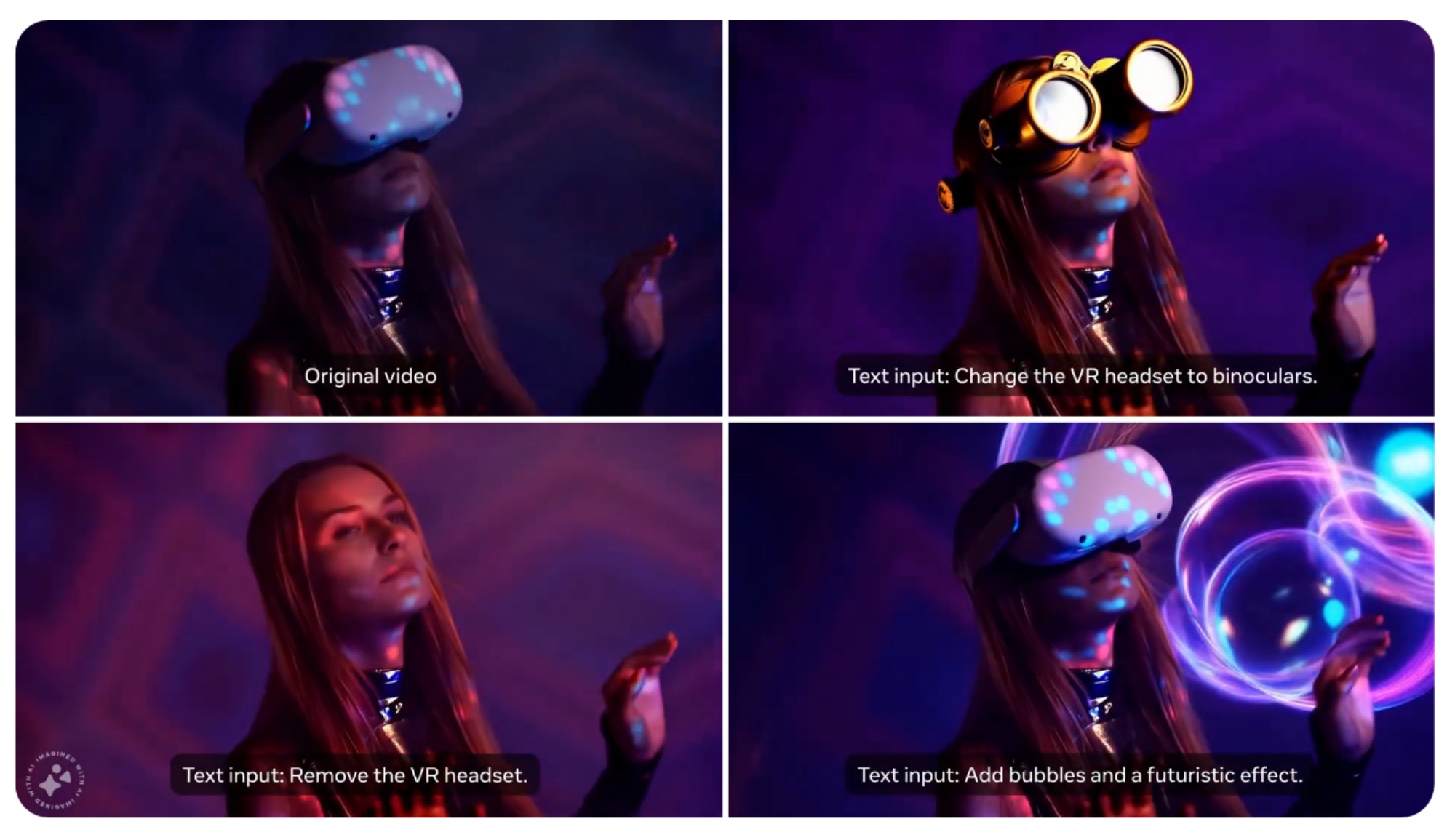

Movie Gen can generate personalised videos from an uploaded photo and can even edit standard, non-AI videos using text-based commands. According to Meta, the tool represents the "third wave" of its generative AI research, following earlier media-creation tools such as Make-A-Scene and its Llama-powered models.

At its core, Movie Gen is powered by a 30-billion-parameter video model, accompanied by a 13-billion-parameter audio model that can produce up to 45 seconds of 48 kHz audio, including ambient sounds, Foley effects, and instrumental music. There is no voice synchronisation support yet, which Meta attributes to design choices in the current model's development.

Training Movie Gen required an extensive data set, which Meta says combines licensed and publicly available material: approximately 100 million videos, one billion images, and a million hours of audio. Although the company has been open about the general scope of its training materials, the specifics remain unclear. That raises concerns about the sources used, particularly in light of its previous admission that it used Australian users' data to train its AI models.

Meta says test audiences found Movie Gen's results more realistic, with fewer of the typical flaws of AI-generated video, such as unnerving eyes or distorted fingers. Even so, the company emphasises that Movie Gen and similar AI technologies are not designed to replace human artists or animators. The tech giant stresses that these models are intended to augment creativity and provide new avenues for self-expression, particularly for individuals without traditional artistic tools or skills. Movie Gen is not yet available to the public, which gives both Meta and the broader AI community time to assess its potential applications and implications.
