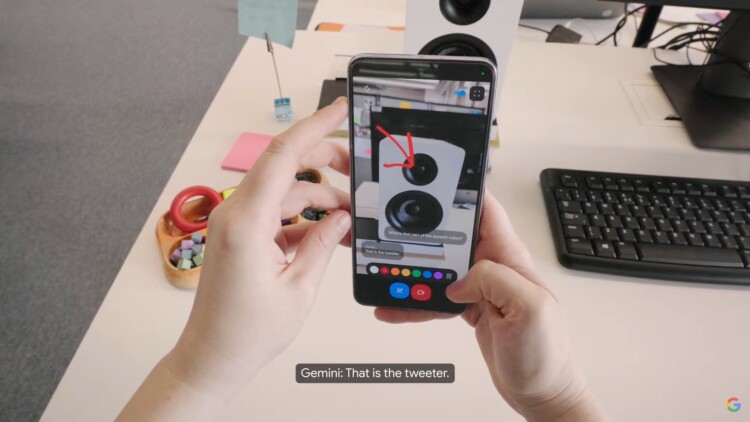

Remember the teaser that Google put up on X, previously Twitter, about an AI model that’s very similar to OpenAI’s GPT-4o? With its announcement at Google I/O out of the way, the internet search giant has revealed that particular implementation of its Gemini AI model to be what it calls Project Astra.

If you’ve seen the earlier demo of GPT-4o, then you’ve mostly seen what Project Astra can do through its own demo. In comparison, though, Project Astra is depicted in said demo as notably less long-winded than GPT-4o. If you still feel that its responses are too long, the option to interrupt is still there.

One thing of note from the demo, though, is that it shows Project Astra running on a Pixel phone and then on a pair of smart glasses of some sort. This is interesting to see, considering Google already sent its Project Iris AR glasses, which were supposed to be the second coming of Google Glass, to the graveyard.

On that note, an implementation of Project Astra is coming to consumers via Gemini Live. Accessible via the Gemini app, it is shown to be natively multimodal, much like GPT-4o. Google says that this is coming later this year, but no specific date was provided. Based on the keynote, it doesn’t look like Gemini Live, and the bits of Project Astra available through it, will be locked behind the premium version of the AI model.

As we mentioned with GPT-4o, though, demos are always made to look impressive, and it remains to be seen if Project Astra will be as impressive as it is portrayed to be. One demo of the Google Gemini video search has already been spotted providing erroneous suggestions for a problem.

(Source: Google / YouTube [1], [2])
