NVIDIA officially announced Blackwell, its newest and most powerful AI-focused HPC GPU, at GTC 2024. According to NVIDIA, the new GPU is designed to run real-time generative AI on trillion-parameter large language models (LLMs) at up to 25 times lower cost and energy consumption than its predecessor, Hopper.

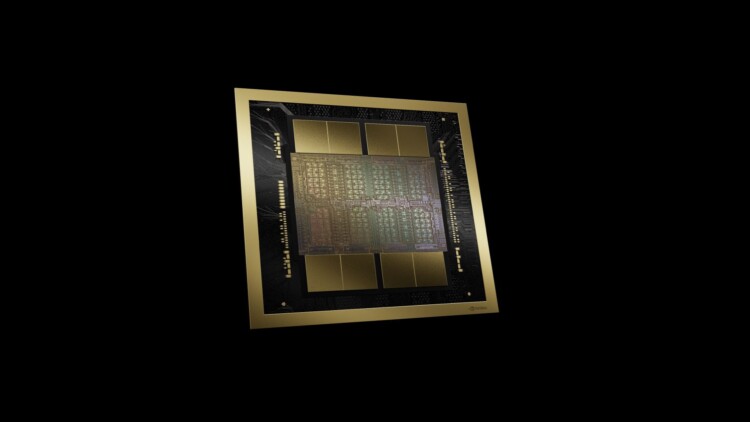

Specs-wise, the new Blackwell GPU is built on a custom TSMC 4NP process and consists of two reticle-limit GPU dies joined by a 10TB/s chip-to-chip link, so the pair operates as a single, unified GPU. It also comes with up to 192GB of HBM3e memory delivering 8TB/s of bandwidth, and offers 1.8TB/s of NVLink bandwidth for connecting multiple Blackwell GPUs.
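To put the memory figures in perspective, here is a back-of-the-envelope sketch using the headline numbers above. It assumes decimal units (1 GB = 10^9 bytes), treats 192GB and 8TB/s as fully usable peak values, and counts model weights only (no KV cache, activations, or framework overhead). The helper names are hypothetical.

```python
import math

# Headline per-GPU specs quoted above. Assumed decimal units: 1 GB = 1e9 B, 1 TB = 1e12 B.
HBM_CAPACITY_BYTES = 192e9  # up to 192 GB of HBM3e
HBM_BANDWIDTH_BPS = 8e12    # 8 TB/s of HBM bandwidth

# Storage cost of one weight at each precision (weights only, ignoring any scale factors).
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}


def max_params(precision: str) -> float:
    """Largest number of weights that fits in one GPU's HBM."""
    return HBM_CAPACITY_BYTES / BYTES_PER_PARAM[precision]


def gpus_for_weights(num_params: float, precision: str) -> int:
    """Minimum number of GPUs whose combined HBM can hold the weights alone."""
    return math.ceil(num_params * BYTES_PER_PARAM[precision] / HBM_CAPACITY_BYTES)


def hbm_sweep_seconds(num_bytes: float = HBM_CAPACITY_BYTES) -> float:
    """Time to read num_bytes from HBM once at peak bandwidth."""
    return num_bytes / HBM_BANDWIDTH_BPS


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: ~{max_params(precision) / 1e9:.0f}B params per GPU")
    print("1T params at fp4 needs", gpus_for_weights(1e12, "fp4"), "GPUs for weights")
    print(f"one full HBM read: ~{hbm_sweep_seconds() * 1e3:.0f} ms")
```

Running it reports roughly 96B, 192B, and 384B parameters per GPU at FP16, FP8, and FP4, shows that a trillion FP4 weights need at least 3 GPUs just for storage, and gives about 24 ms for one full read of HBM. That last figure matters because token-by-token decoding is often memory-bound: if the weights filled the whole HBM, each generated token would need at least one full read, so bandwidth rather than compute would set the latency floor.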

The Blackwell GPU also features a second-generation Transformer Engine, powered by new micro-tensor scaling support and NVIDIA’s advanced dynamic range management algorithms, which are integrated into the NVIDIA TensorRT-LLM and NeMo Megatron frameworks. With new 4-bit floating point (FP4) AI inference capabilities, the GPU will also support double the compute and model sizes.
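NVIDIA does not detail how micro-tensor scaling works, so the following only illustrates the general idea behind block-scaled 4-bit formats. It is modeled on the open OCP Microscaling (MX) MXFP4 format, not on NVIDIA’s implementation: values are split into small blocks, each block gets its own power-of-two scale, and each element is rounded to a 4-bit E2M1 float. The 32-element block size, the simple no-clipping scale rule (which differs from the exact MX rule), and all function names are assumptions made for this sketch.

```python
import math

# Magnitudes representable by a 4-bit E2M1 float (1 sign, 2 exponent, 1 mantissa bit).
FP4_E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = FP4_E2M1_GRID[-1]
BLOCK_SIZE = 32  # assumption borrowed from OCP MX; not an NVIDIA-published value


def nearest_fp4(x: float) -> float:
    """Round x to the nearest E2M1 value, keeping its sign.

    Magnitudes above 6 saturate to 6; ties go to the smaller magnitude.
    """
    mag = min(FP4_E2M1_GRID, key=lambda g: abs(abs(x) - g))
    return math.copysign(mag, x)


def quantize_block(block: list[float]) -> tuple[float, list[float]]:
    """Pick one power-of-two scale for the block, then round each element to FP4."""
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 1.0, [0.0] * len(block)
    # Assumed rule: smallest power of two that maps the largest magnitude into [0, 6].
    scale = 2.0 ** math.ceil(math.log2(amax / FP4_MAX))
    return scale, [nearest_fp4(v / scale) for v in block]


def quantize(values: list[float]) -> list[tuple[float, list[float]]]:
    """Split values into fixed-size blocks and quantize each one independently."""
    return [quantize_block(values[i:i + BLOCK_SIZE])
            for i in range(0, len(values), BLOCK_SIZE)]


def dequantize(blocks: list[tuple[float, list[float]]]) -> list[float]:
    """Multiply each 4-bit element by its block's scale."""
    return [scale * q for scale, qs in blocks for q in qs]
```

For example, `quantize([0.01 * i for i in range(32)] + [100.0 * i for i in range(32)])` picks a scale of 0.0625 for the first block and 1024 for the second. Using the single scale of 1024 for all 64 values would round every entry of the small block to zero, whereas per-block scales preserve most of them. Each stored scale adds a little memory on top of the 0.5 bytes per weight.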

Moving on, Blackwell is also the first HPC GPU to support NVIDIA’s fifth-generation NVLink, which, as mentioned earlier, delivers up to 1.8TB/s of bidirectional bandwidth per GPU for communication between Blackwell GPUs. Other features include a dedicated RAS Engine for reliability, availability, and serviceability.
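To get a feel for what 1.8TB/s of NVLink bandwidth means for multi-GPU work, the sketch below applies the standard bandwidth-only cost model for a ring all-reduce, a collective commonly used to combine results across GPUs. It assumes the 1.8TB/s bidirectional figure splits evenly into 900GB/s per direction, and it ignores latency, protocol overhead, and switch topology, so treat the results as rough lower bounds. The function name is hypothetical.

```python
NVLINK_BIDIR_BPS = 1.8e12                  # 1.8 TB/s per GPU, both directions combined
NVLINK_PER_DIR_BPS = NVLINK_BIDIR_BPS / 2  # assumed even split: 900 GB/s each way


def ring_allreduce_seconds(num_gpus: int, bytes_per_gpu: float,
                           link_bps: float = NVLINK_PER_DIR_BPS) -> float:
    """Bandwidth-only estimate for a ring all-reduce (latency terms ignored).

    Each GPU sends (and receives) 2 * (N - 1) / N * S bytes: N - 1 reduce-scatter
    steps plus N - 1 all-gather steps, each moving S / N bytes.
    """
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    traffic = 2 * (num_gpus - 1) / num_gpus * bytes_per_gpu
    return traffic / link_bps
```

For example, all-reducing 1GB across eight GPUs works out to 2 × 7/8 × 1GB / 900GB/s, or about 1.9 ms. Because the per-GPU traffic approaches 2S as N grows, per-link bandwidth, rather than GPU count, dominates this estimate.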

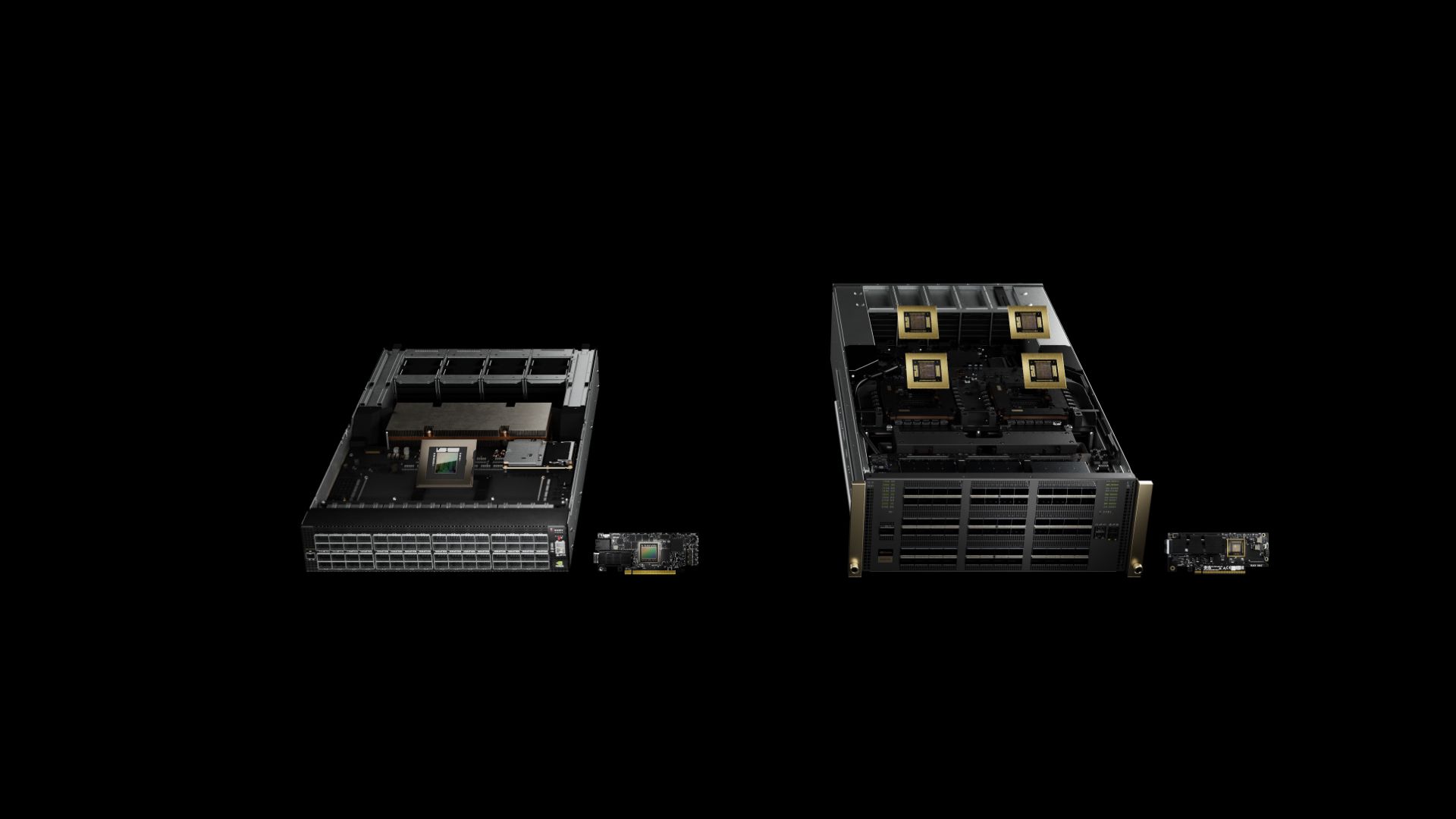

As for availability, NVIDIA’s new GPU will ship as part of the GB200 Grace Blackwell Superchip, which connects two B200 Tensor Core GPUs to the Grace CPU. It is also a key component of the GB200 NVL72, a multi-node, liquid-cooled, rack-scale system designed for the most compute-intensive workloads.

In addition, NVIDIA is offering the HGX B200, a server board that links eight B200 GPUs through NVLink to support x86-based generative AI platforms.