Apple has released an early-stage version of its image editing AI model, known as Multimodal Large Language Models-Guided Image Editing (MGIE). As noted by VentureBeat, the model is currently accessible through GitHub, and a demo hosted on Hugging Face Spaces (pictured) is also available if you're interested in trying the tool out.

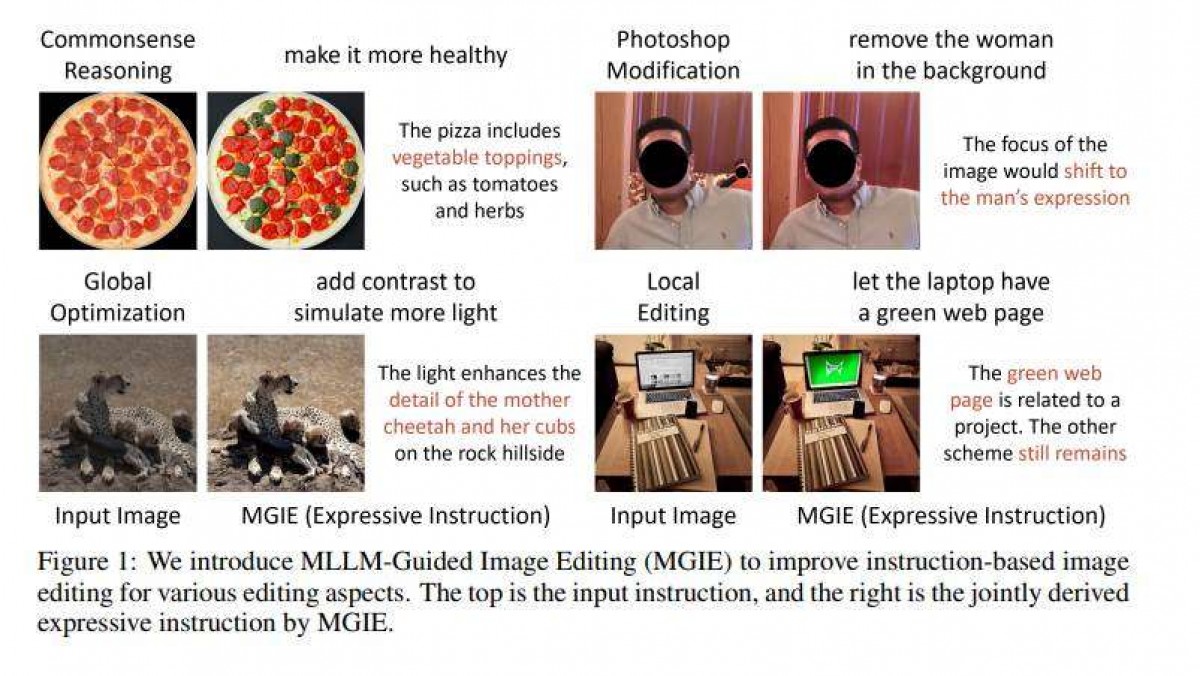

The company's image editing AI model uses MLLMs (multimodal large language models) to interpret text commands for manipulating images. According to its project paper, MGIE excels at turning simple or ambiguous text prompts into precise, explicit instructions, which then give the underlying image editor clearer guidance on what to change. For example, a request to "make a pepperoni pizza more healthy" could prompt the tool to add vegetable toppings.

Beyond these semantic edits, MGIE can also handle basic image editing tasks such as cropping, resizing, and rotating, as well as adjusting brightness, contrast, and color balance, all through text commands. It can also target specific areas within an image, modifying details such as hair, eyes, and clothing, or removing background elements.

While MGIE represents an early step in Apple's foray into generative AI, it is not expected to be integrated into existing Apple devices. However, its release offers a glimpse of where the company's AI efforts may be heading.

(Source: VentureBeat)
