If you spent any time on X, previously Twitter, last week, you may have come across AI-generated deepfakes of Taylor Swift. These depict the singer in spicy situations, wearing only body paint amongst an American football crowd, likely a reference to her current boyfriend, Travis Kelce. It is no surprise that the Elon Musk-owned platform sees this as a problem, ironic as that may be, to the point that the rebranded bird app has blocked searches for the artist.

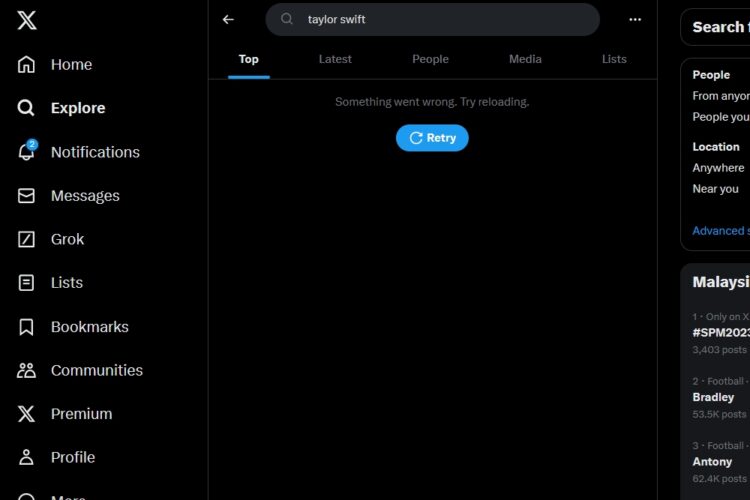

Performing a search for Taylor Swift now leads to the same error message you get with spotty internet connectivity, prompting a refresh, which naturally does nothing. The Wall Street Journal reports that X head of business Joe Benarroch says that “this is a temporary action and done with an abundance of caution as we prioritise safety on this issue”.

In a post on one of its own official accounts, X says that it has a “zero-tolerance policy” for non-consensual nudity. It goes on to say that it is “closely monitoring the situation” and is actively removing such content, but does not mention Taylor Swift by name.

On one hand, the response from X is the expected one. On the other, some may be of the opinion that the response was not as swift, pun not intended, as it should have been. That isn’t exactly surprising, considering that as part of the acquisition by Musk, the platform let go of a vast majority of its staff, including those who were supposed to keep an eye on content like this.

Another report by 404 Media claims that these generated images of Taylor Swift originated from 4chan, as well as “a specific Telegram group dedicated to” creating such images. The report also says that the images were made using Microsoft’s generative AI tools. In an interview with NBC News, Microsoft CEO Satya Nadella says that while the company has guardrails in place to prevent such content from being produced, it will have to move fast to add more.