Virtual assistants like Siri rely on voice commands, but these can sometimes be misheard for a number of reasons. This could be as simple as a noisy environment or, on a more cultural level, an accent the virtual assistant was not sufficiently trained to recognise. An Apple patent describes a potential solution to such problems in the form of virtual lip-reading.

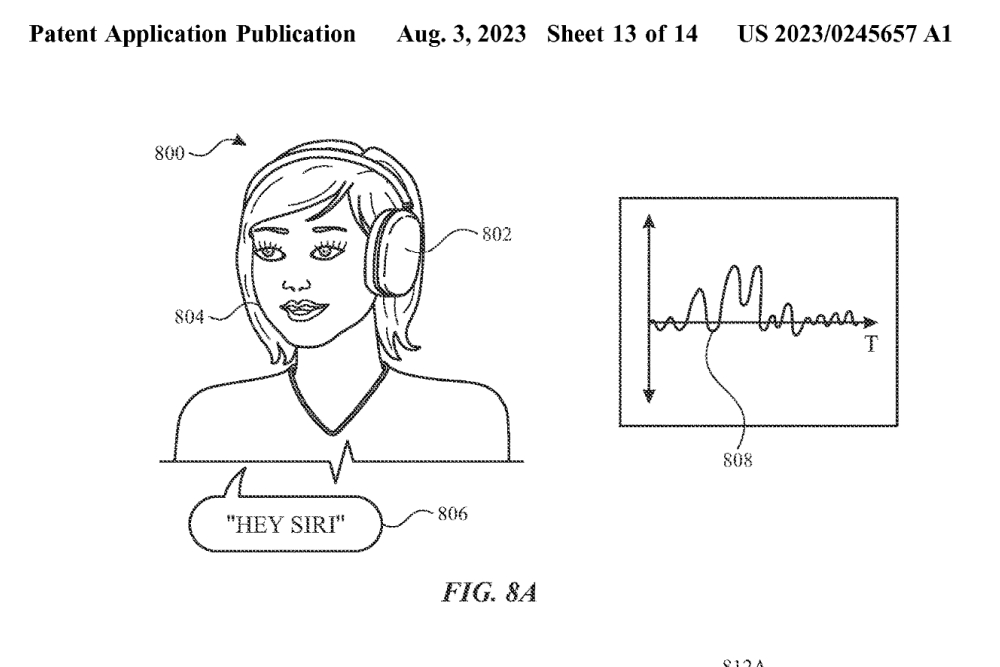

The patent itself is titled “Keyword Detection Using Motion Sensing”. As the name suggests, the system reads the motion of a user’s lips, as well as their head movements, and then cross-references that against a library of motion data for spoken words. It is an unconventional approach, but the idea is sound.

Oddly enough, the patent doesn’t describe this as a way to improve or supplement Siri. Instead, it may replace Siri’s audio-based listening entirely. The patent explains that constantly listening for trigger phrases like “Hey Siri” drains power and processing capacity, especially when the user is not actively using the feature. Motion sensing, which uses accelerometers and gyroscopes instead of microphones, is claimed to consume less power than audio sensing.

Because this approach detects pseudo voice commands with gyroscopes and accelerometers, AirPods would probably be the primary beneficiary. The patent also mentions smart glasses, so it could potentially work with the Apple Vision Pro as well. But as always, patents don’t necessarily make their way into actual products.

(Source: USPTO)