By now, you’ve probably heard of OpenAI’s chatbot, the aptly named ChatGPT, and how Microsoft recently incorporated it into Bing. Hell, we’re willing to bet that you’ve actually used the chatbot to do your homework for you, or even had it write up that report your boss has been hounding you to finish.

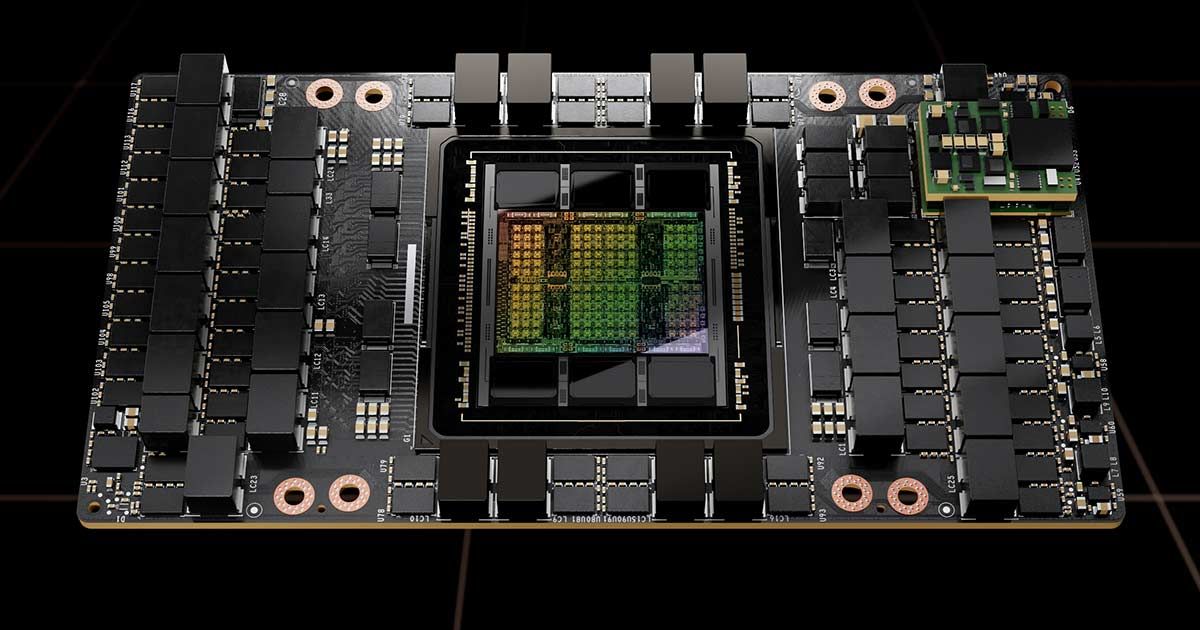

On that note, and getting back to Microsoft, the company’s Azure division recently upgraded its cloud platform with new ND H100 v5 virtual machines (VMs). The new supercomputer, which is now the driving force behind its generative AI project, is powered by thousands of NVIDIA H100 GPUs, all of which are interconnected through the GPU maker’s Quantum-2 InfiniBand networking. Specs-wise, the breakdown of each VM is as listed below:

- 8x NVIDIA H100 Tensor Core GPUs interconnected via next-gen NVSwitch and NVLink 4.0

- 400 Gb/s NVIDIA Quantum-2 CX7 InfiniBand per GPU with 3.2Tb/s per VM in a non-blocking fat-tree network

- NVSwitch and NVLink 4.0 with 3.6TB/s bisectional bandwidth between 8 local GPUs within each VM

- 4th Gen Intel Xeon Scalable processors

- PCIe Gen5 host to GPU interconnect with 64GB/s bandwidth per GPU

- 16 Channels of 4800MHz DDR5 DIMMs
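
For a quick sanity check of how the per-GPU figures in the list relate to the per-VM totals, here is a minimal Python sketch. The variable and function names are purely illustrative, and the aggregate PCIe figure is derived here from the per-GPU number rather than quoted by Microsoft or NVIDIA, so treat it as a back-of-the-envelope estimate.

```python
# Figures taken from the spec list above; all names are illustrative.
GPUS_PER_VM = 8
INFINIBAND_GBITS_PER_GPU = 400  # Gb/s per GPU (Quantum-2 CX7 InfiniBand)
PCIE_GBYTES_PER_GPU = 64        # GB/s per GPU (PCIe Gen5 host link)


def aggregate(per_gpu: float, gpus: int = GPUS_PER_VM) -> float:
    """Multiply a per-GPU bandwidth by the number of GPUs in one VM."""
    return per_gpu * gpus


# 8 GPUs x 400 Gb/s, converted from gigabits to terabits per second.
infiniband_tbits = aggregate(INFINIBAND_GBITS_PER_GPU) / 1000

# The same total in gigabytes per second (8 bits per byte).
infiniband_gbytes = aggregate(INFINIBAND_GBITS_PER_GPU) / 8

# Derived estimate, not a quoted spec: total host-to-GPU PCIe bandwidth.
pcie_gbytes = aggregate(PCIE_GBYTES_PER_GPU)

print(f"InfiniBand per VM: {infiniband_tbits} Tb/s ({infiniband_gbytes} GB/s)")
print(f"PCIe Gen5 per VM (derived): {pcie_gbytes} GB/s")
```

The first result matches the 3.2Tb/s per VM quoted in the list, which is simply eight 400 Gb/s links added together.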

Again, the new Microsoft Azure supercomputer will be used to train conversational and generative AI applications. Like all things in life, training is necessary if one wishes to become better or more adept at something, and it is no different when it comes to making AI models more responsive and giving them new capabilities. Such is the case with OpenAI’s ChatGPT: the increasingly popular chatbot, which has found itself in the spotlight for a multitude of applications, is expected to become more fluent in natural language as it is trained on the new NVIDIA H100 GPUs and interacts with more users over time.

“NVIDIA and Microsoft Azure have collaborated through multiple generations of products to bring leading AI innovations to enterprises around the world. The NDv5 H100 virtual machines will help power a new era of generative AI applications and services,” says Ian Buck, Vice President of hyperscale and high-performance computing at NVIDIA.