You can’t blame most Malaysians, even the social media addicts, for not knowing what Parler is. Yet, tech giants Apple, Google, and Amazon have all recently banned the app from their platforms, citing concerns that it was being used to promote violence. Why all the fuss and why should I care, you may ask?

Because the Parler fiasco raises an incredibly important question that resonates not just in the United States, but in Malaysia too. That is: should online platforms be held (legally and morally) responsible for content posted by their users?

Before its (effective) demise, Parler regularly promoted itself as a freewheeling “free speech” alternative to far more moderated rivals Twitter and Facebook. Its critics say the app was used by rioters to plan, encourage, and execute the violent breach of the US Capitol building on 6 January.

For years, social media platforms have been accused of not doing enough to curb everything from terrorist propaganda and recruitment (think ISIS videos) to conspiracy theories and fake news to hate speech and incitements to violence. US President Donald Trump is arguably a repeat offender in the latter two categories, but big tech previously refrained from censoring any of his posts, despite intense and mounting criticism.

In 2018, explaining why it still hadn’t banned Trump, Twitter said, “Blocking a world leader from Twitter or removing their controversial Tweets would hide important information people should be able to see and debate.” Suffice it to say, the company’s thinking has clearly changed since the recent US Capitol riots, which the American president is accused of inciting. By (finally) banning Trump now, Twitter is effectively conceding that it has a moral responsibility to police the content of its users – even a sitting US president.

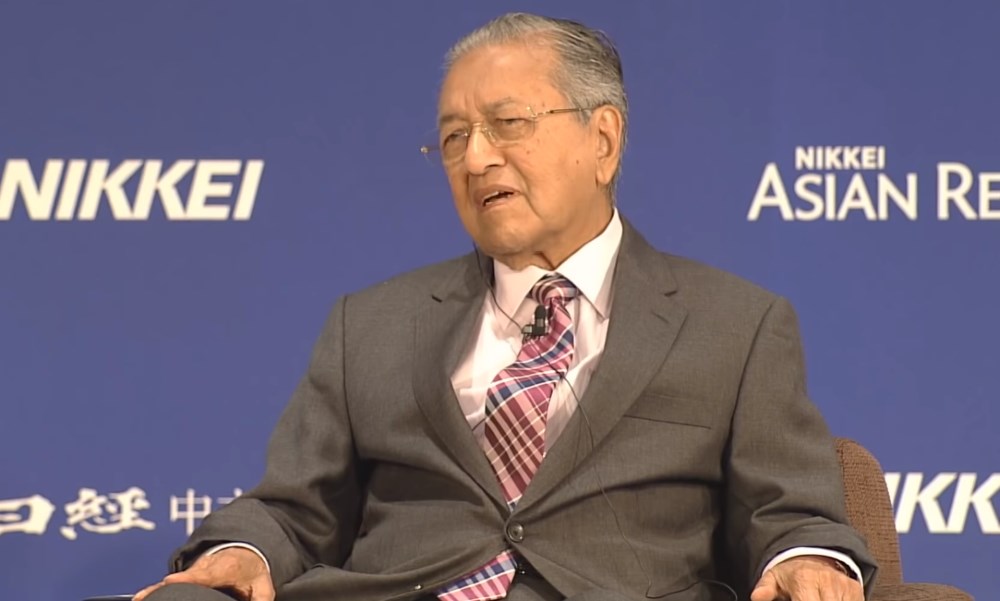

But this is not entirely unprecedented. Closer to home, former prime minister Mahathir Mohamad got in trouble with Facebook and Twitter last October over remarks he posted about extremist violence in France. His posts, which stated that “Muslims have a right to be angry and kill millions of French people for the massacres of the past,” were swiftly deleted by both platforms. Might his comments have survived if they had been posted on Parler instead?

Responding to Apple’s ban of the app, Parler CEO John Matze snapped, “Apparently they believe Parler is responsible for ALL user generated content on Parler. Therefor (sic) by the same logic, Apple must be responsible for ALL actions taken by their phones. Every car bomb, every illegal cell phone conversation, every illegal crime committed on an iPhone, Apple must also be responsible for.”

But if apps like Parler have shown every reluctance to moderate user content or to act against problematic content flagged by their hosts and users, what are we to make of platforms that do remove illegal or offensive user content once they are made aware of it? Should they still be held responsible?

Again, closer to home, local news portal Malaysiakini is currently being tried for contempt of court for comments posted by its readers. The portal said that it removed the problematic comments shortly after it was made aware of them. But the prosecution argued that this was insufficient – the comments should’ve been taken down sooner, and Malaysiakini should’ve done more to moderate comments.

The Federal Court, the nation’s highest court, is scheduled to rule on the case this Friday, but a delay is possible due to the new Movement Control Order (MCO). Regardless of the court’s decision, this ongoing debate over freedom and responsibility (especially who is responsible, and to what extent) is certainly a global one, certainly consequential, and certainly far from over.

(Image: Reuters via BBC)