Google is expanding the applications of Google Lens, allowing its machine learning algorithm to interact with the real world in real time. Until now, the image recognition system was mainly limited to Google Assistant and Google Photos, but it will soon be integrated with the Android Camera app and Google Maps.

In the coming weeks, Android users will be able to point their phone cameras at objects in the real world and see information cards appear on the display. A Style Match feature will allow Google Lens to identify clothes and accessories, and then match them with similar items that fit the style.

Similarly, Real Time Results lets users point their cameras at objects to receive Google search results. For example, pointing at a concert poster could bring up information about the performer, while pointing at a menu could help identify unfamiliar food items.

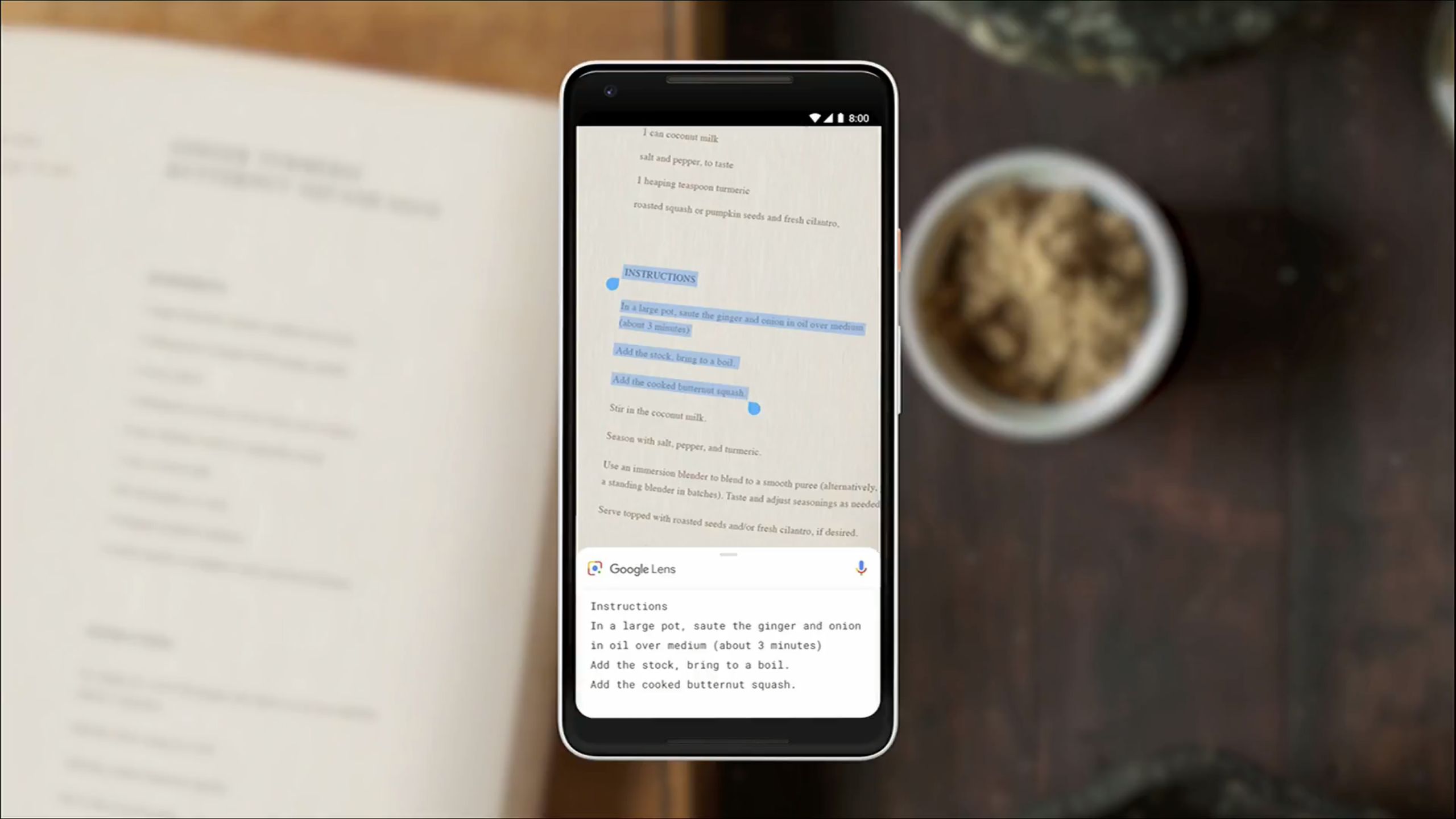

Google Lens in the Camera app is also capable of recognising text in the real world, allowing users to copy text directly to the clipboard from whatever they are pointing their phones at.

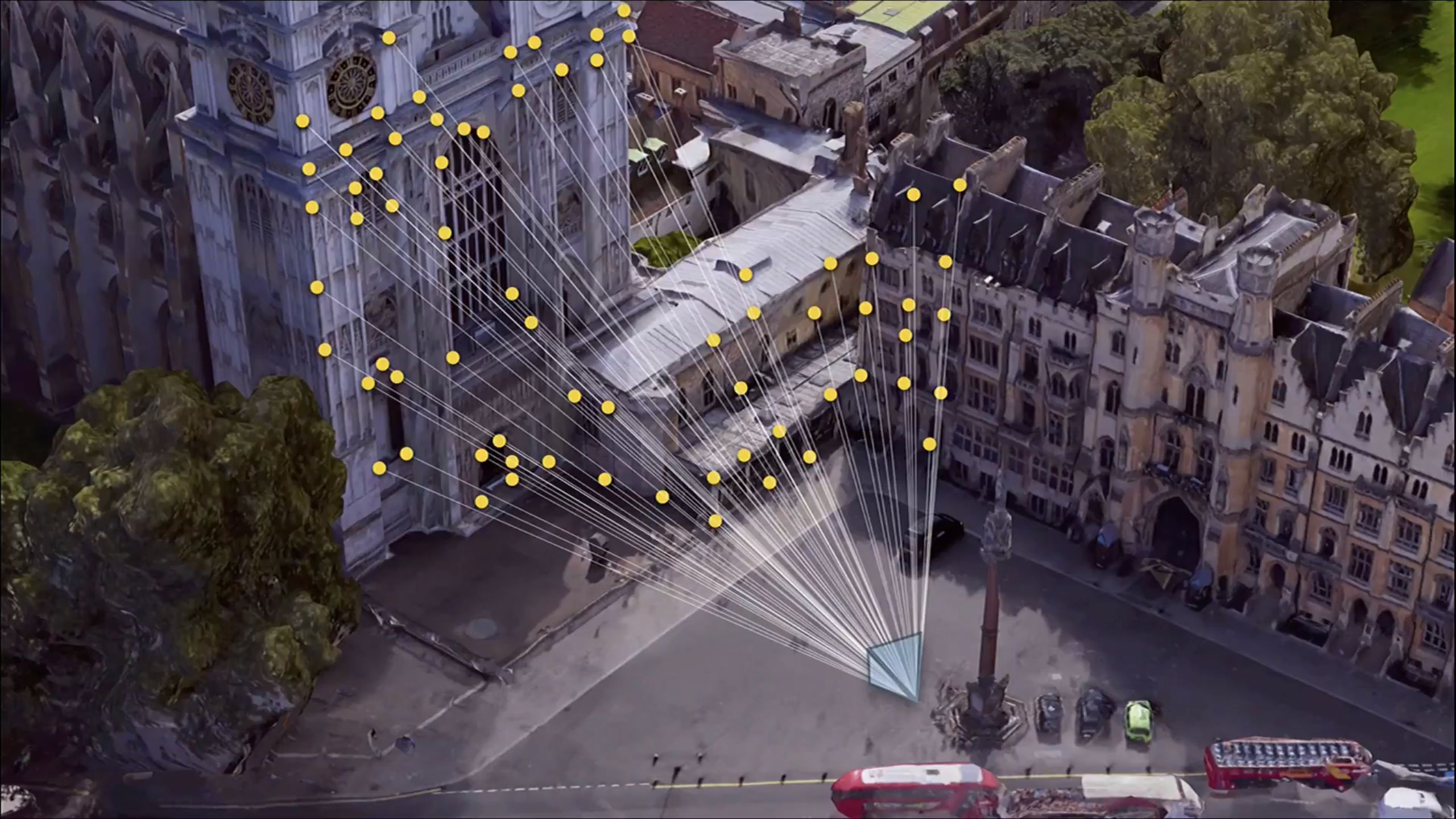

A more advanced application of Google Lens is its integration with Google Maps to achieve what the company is calling a Visual Positioning System, or VPS. It’s essentially a complementary system to GPS that uses the camera to establish the user’s location from landmarks.

According to Google, Google Lens does this by recognising specific buildings through Street View and then measuring the distance to those buildings. Together, these give a more accurate location than relying only on satellite-based GPS.

It also allows Google Maps to display on-screen navigation through Street View, clearly showing users which direction to turn based on what the smartphone is looking at in the moment.
