UPDATE: The original version of this article assumed that this tech powers Google Pixel 2’s Portrait Mode. It has been updated to reflect that it is not the same tech, although it may have the same capability.

One of the main attractions of the Google Pixel 2 is its Portrait Mode, which works even though the phone is equipped with only one camera. As expected, the implementation is not exactly straightforward and involves several techniques, including semantic image segmentation.

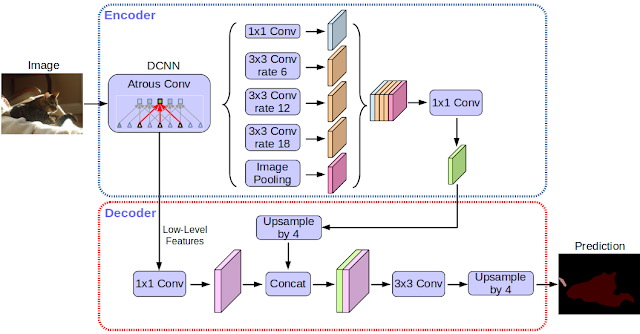

This particular technique uses machine learning to label each pixel in the image, which makes it possible to differentiate the subject from the background. That separation in turn helps create the bokeh, or blur effect, coveted by casual shooters.
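To make the idea concrete, here is a minimal sketch of how a subject mask can drive a background blur. It is an illustration only, not Google's pipeline: the function names `box_blur` and `portrait_blur` are hypothetical, the mask is drawn by hand rather than produced by a segmentation model, and a simple box blur stands in for a realistic lens blur.

```python
import numpy as np

def box_blur(image, radius):
    """Blur an H x W x C image with a (2*radius+1)-wide box filter."""
    k = 2 * radius + 1
    height, width = image.shape[:2]
    # Repeat edge pixels so the filter has neighbours at the borders.
    padded = np.pad(image, ((radius, radius), (radius, radius), (0, 0)), mode="edge")
    out = np.zeros_like(image, dtype=np.float64)
    # Sum every shifted copy of the image inside the k x k window.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + height, dx:dx + width]
    return out / (k * k)

def portrait_blur(image, subject_mask, radius=3):
    """Keep pixels where subject_mask is True sharp and blur the rest."""
    image = image.astype(np.float64)
    blurred = box_blur(image, radius)
    mask = subject_mask[..., None]  # broadcast the mask over colour channels
    return np.where(mask, image, blurred)

# Toy input: a random 8 x 8 RGB image with a hand-made square "subject".
# In practice the mask would come from a segmentation model.
rng = np.random.default_rng(0)
image = rng.random((8, 8, 3))
mask = np.zeros((8, 8), dtype=bool)
mask[2:6, 2:6] = True

result = portrait_blur(image, mask, radius=2)
```

The quality of the final effect depends heavily on how accurate the mask is along the subject's outline, since any mislabelled pixel ends up either wrongly blurred or wrongly sharp.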

It is also the same technique that is the focus of DeepLab v3+, which Google open sourced earlier this week. In development for the past three years, the latest iteration of the tech apparently offers improved boundary detection over previous DeepLab models.

To be clear though, DeepLab is not the exact tech that was used to power Pixel 2’s critically acclaimed Portrait Mode, even though there are some similarities between them, notably the fact that both take advantage of TensorFlow.

Meanwhile, the team behind DeepLab hopes that open sourcing the tech will help academia and industry develop it further. They also hope that other people will find other uses for it, since the tech clearly has potential in applications beyond just blurring out the background in a photo.

(Source: Google Labs)
