Google I/O 2017 is in full swing, and one of the most interesting new features announced at the developer conference is Google Lens. Essentially, this new feature is a much more powerful Google Goggles, and it allows Google Assistant – and other Google apps – to give users contextual information based on what the smartphone camera is pointed at.

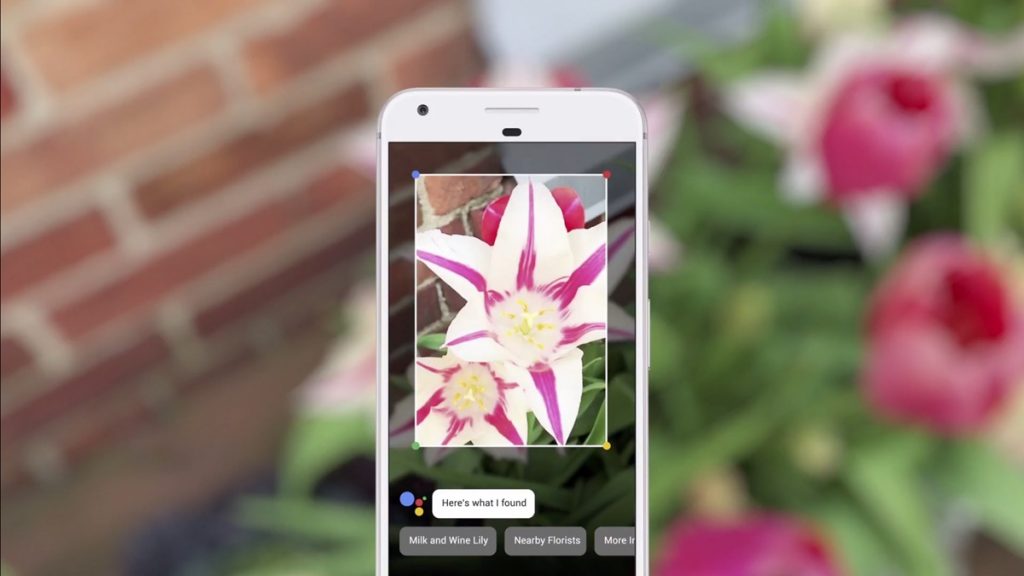

In practice, this means Google Assistant will be able to identify objects in the world around you. Point the camera at a flower, for instance, and Google Assistant will tell you what type of flower it is. You can even point the camera at a Wi-Fi network's password, and the virtual assistant will automatically connect you to that network. How cool is that?

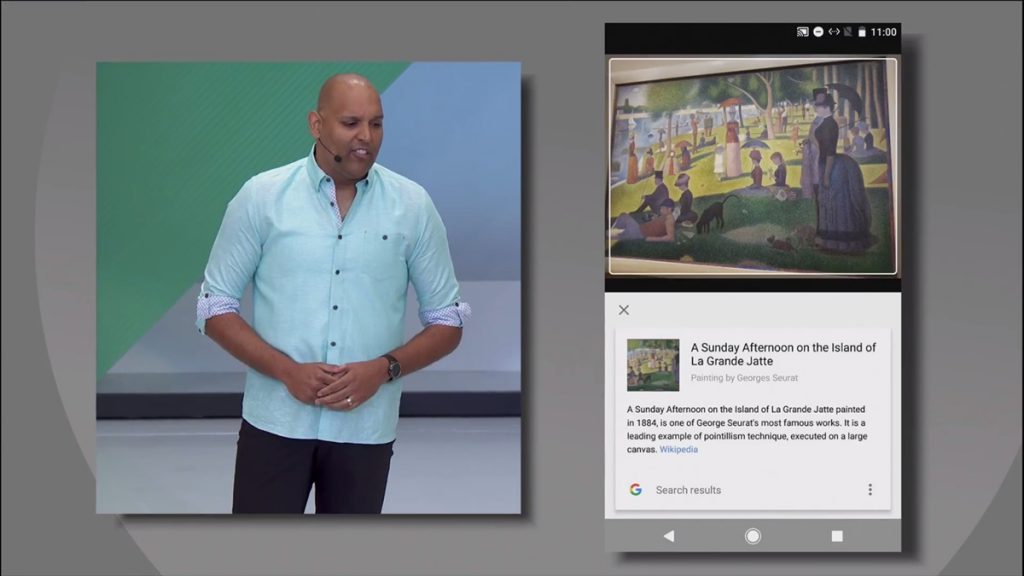

Google Lens is not limited to Google Assistant either: the feature will also be implemented in Google Photos. Say you took a picture of a painting some time ago: all you have to do is open it in Google Photos and activate Google Lens. The feature will then automatically identify the painting and give you relevant information about it.

Based on these examples, Google Lens looks set to be useful in a variety of scenarios. It will arrive first in Google Assistant and Google Photos in the coming months, and will come to other Google products later.
