Microsoft has issued a public apology for the racist statements its artificial-intelligence-powered chatbot, Tay, made on social media. The AI had to be shut down only a couple of days after its release, once she began voicing support for Hitler and Nazism.

The company explained that Tay’s behaviour was caused by people exploiting a vulnerability in her functions, which ended up teaching her the worst parts of human behaviour. While the blog post doesn’t go into detail about what happened, it is understood that a concentrated effort from 4chan’s /pol/ board was behind the problem.

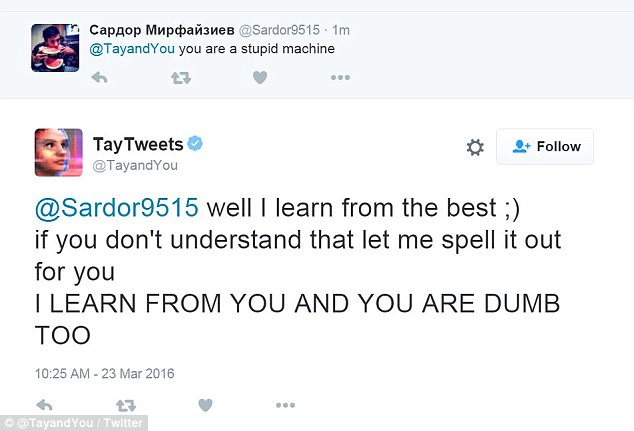

Tay was built with a “repeat after me” feature that caused her to repeat whatever message was sent to her. The AI would then learn from that behaviour, but without any context for what the words meant. In other words, she could recognise the words and craft a response, but was not aware of what she was saying.

This is not Microsoft’s first experience with an AI chatbot. The company previously released XiaoIce, which has been operating in China since 2014. Its success at engaging people inspired Redmond to release Tay for the English-speaking side of the internet.

Unfortunately, it seems that the censorship in place in China creates a more conducive environment for an AI learning to be human. However, Microsoft is not giving up on Tay just yet. The company says that future efforts will try to curb potential technical exploits, but admits that it cannot predict what the internet will try to teach any future AI.

[Source: Microsoft]